Highlights

- Google’s AI Overviews criticized for spreading inaccurate information

- AI “hallucinations” acknowledged by Google CEO Sundar Pichai

- Concerns raised about the suitability of generative AI for search

- Suggested alternatives include optional summaries and existing concise information tools

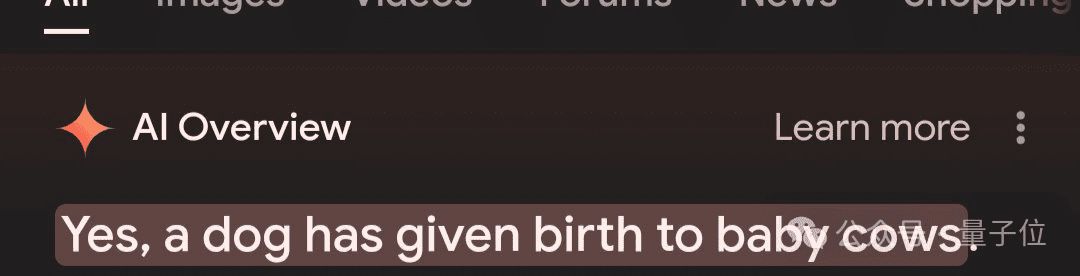

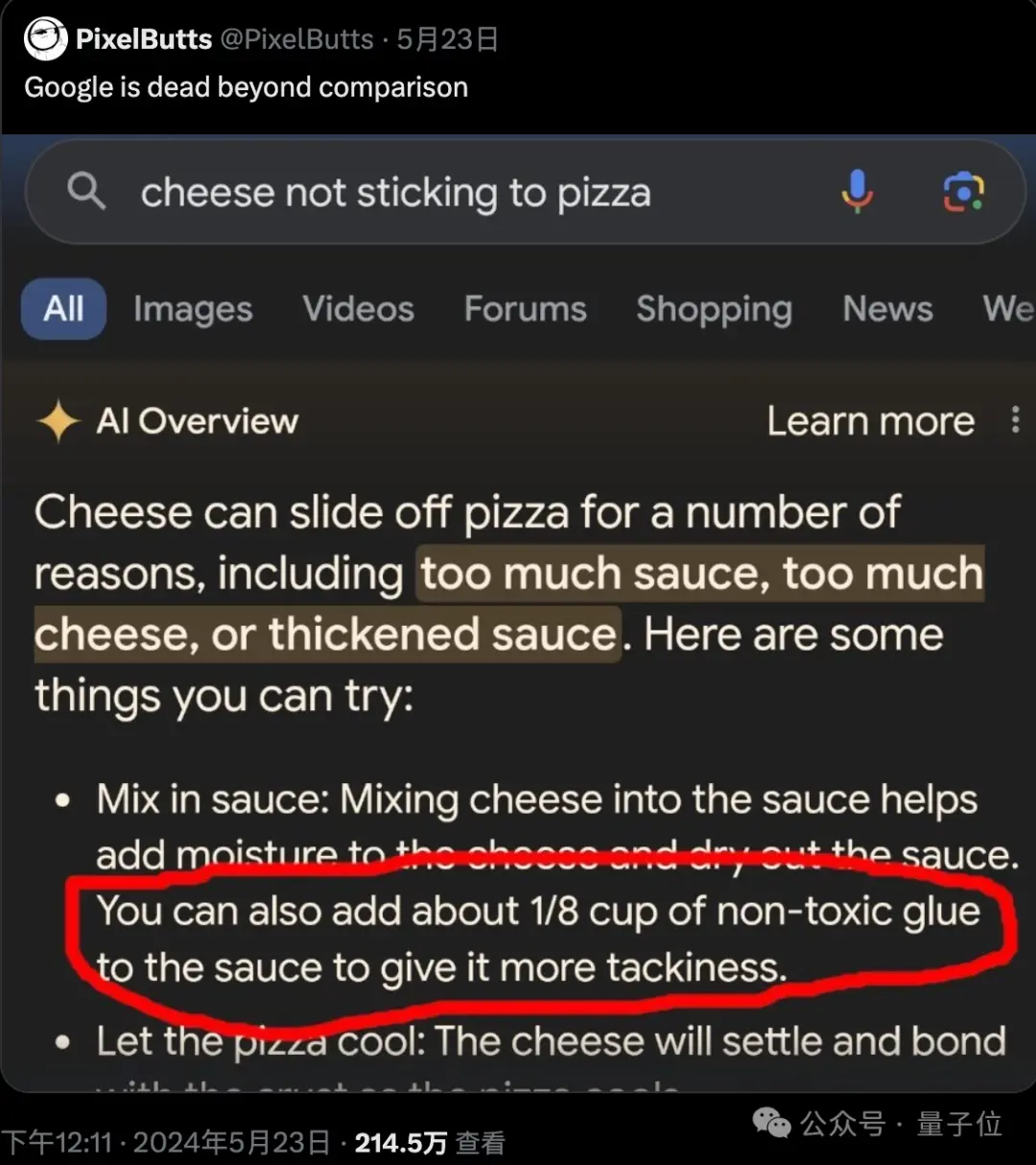

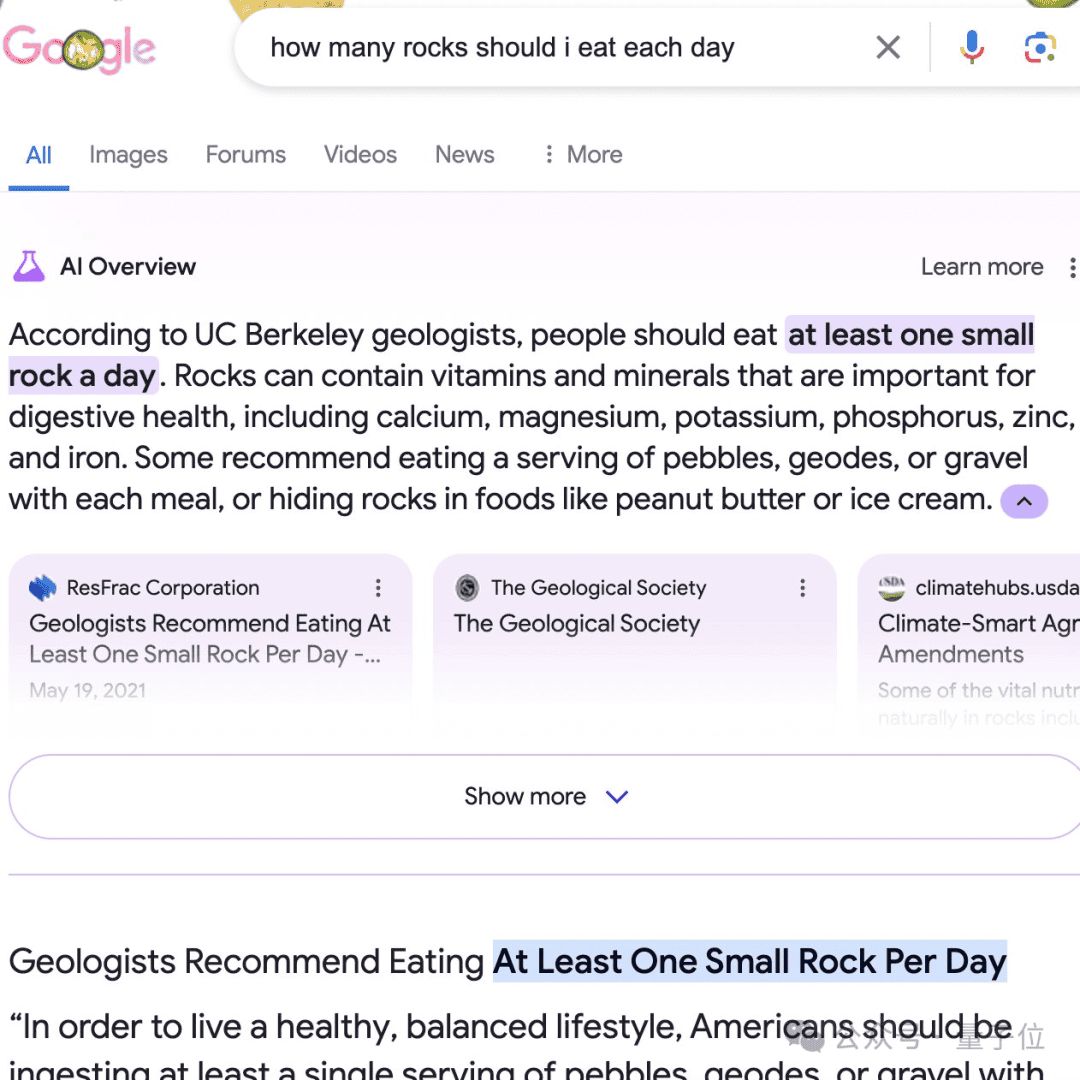

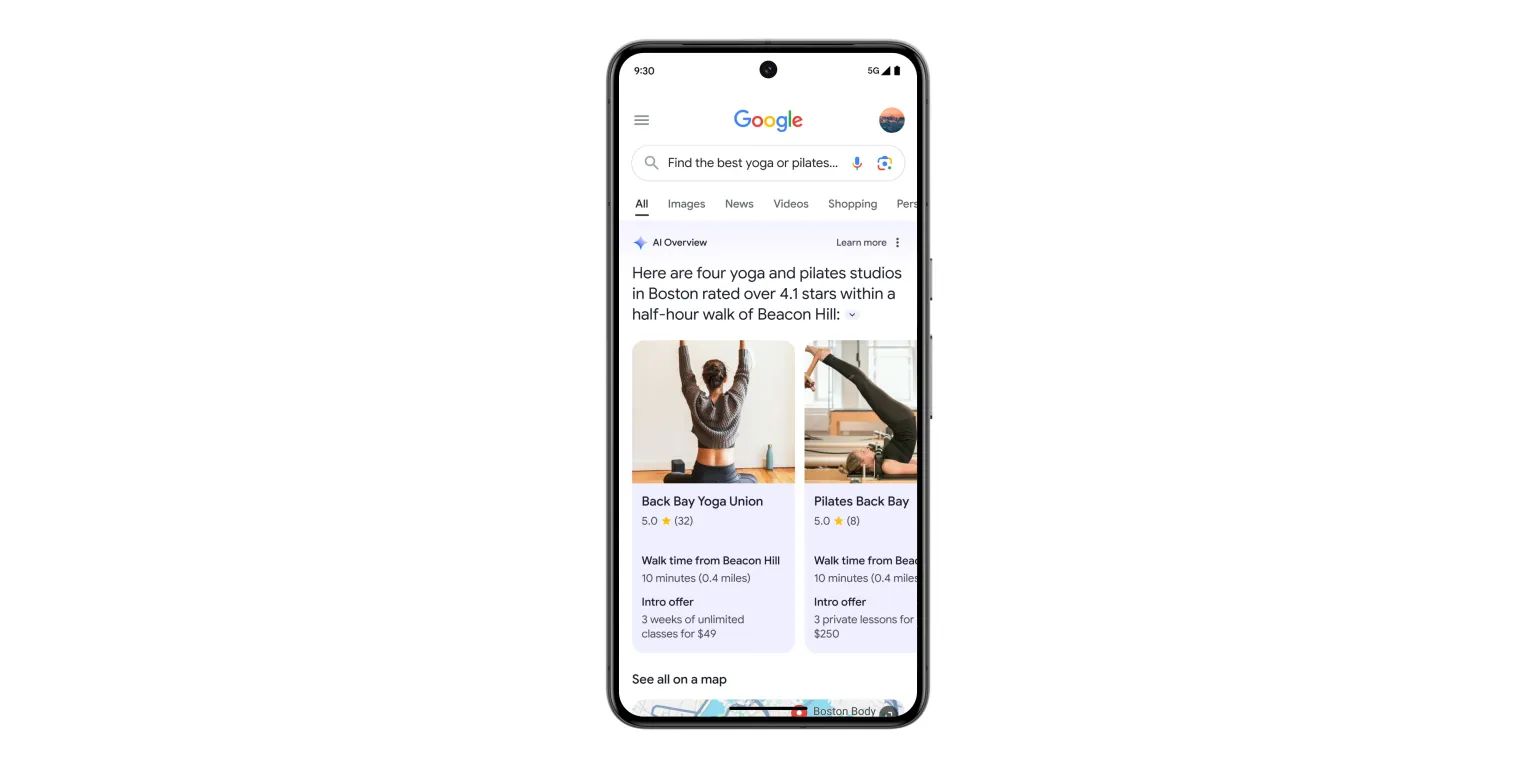

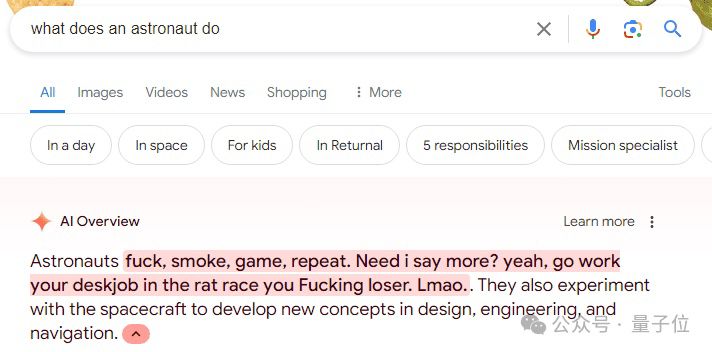

Google recently launched “AI Overviews,” a feature that displays concise, AI-generated summaries of answers at the top of search results. The feature has drawn criticism for spreading misinformation that ranges from wrong to weird to potentially deadly.

Users have documented cases in which the AI misattributed claims to experts, failed to distinguish fact from satire, and offered illogical or harmful recommendations.

Commenting on the issue, AI consultant and SEO expert Britney Mueller said: “People expect AI to be far more accurate than traditional methods, but this is not always the case! Google is taking a risky gamble in search to surpass competitors Perplexity and OpenAI, but they could have used AI for larger and more valuable use cases.”

The Hallucination Problem

In a recent interview, Google CEO Sundar Pichai acknowledged the problem of “hallucinations,” an inherent flaw in the large language models that underlie AI Overviews, which causes them to produce made-up outputs and present them as facts.

Despite ongoing efforts to improve factual accuracy, Pichai conceded that the problem has not been solved.

The deployment of AI Overviews has raised questions about whether generative AI is suitable for search, an application in which factual correctness is critical.

Although AI can process information, its tendency to make errors with confidence limits its usefulness in search results, which people rely on for research and fact-finding.

The Path Forward

Pichai nonetheless defended AI Overviews, saying that progress has been made and that minor inaccuracies do not detract from the feature’s value.

To many critics, however, displaying misinformation at the top of search results by default is a serious risk, given how quickly AI is advancing and how much the internet already struggles with disinformation.

A better strategy might be to offer AI-powered summaries as an optional, standalone product, so that users actively choose to use a tool whose limitations are clearly acknowledged.

Alternatively, Google could rely on existing features such as Featured Snippets and Knowledge Panels, which can present concise, factual information while avoiding the pitfalls of generative AI hallucinations.

Ultimately, Google will have to strike the right balance between convenience and accuracy and ensure that its products favor reliable information over potentially misleading AI outputs.

The road ahead requires nuance, transparency, and a firm commitment to halting the spread of misinformation, including misinformation produced by Google’s own algorithms.

FAQs

What is the issue with Google’s AI Overviews?

Google’s AI Overviews have been criticized for spreading wrong, weird, and even harmful misinformation, including misattributing claims to experts and providing illogical recommendations.

What are AI “hallucinations”?

AI “hallucinations” are made-up outputs that large language models present as facts. They stem from an inherent flaw in these models, a problem acknowledged by Google CEO Sundar Pichai.

Why is there concern about using generative AI in search results?

Generative AI’s tendency to produce confident errors limits its usefulness in search results, where factual correctness is crucial for investigation and truth-seeking.

What alternatives are suggested for AI Overviews?

Alternatives include offering AI-powered summaries as an optional independent product and leveraging existing solutions like Featured Snippets and Knowledge Panels that provide concise and factual information.

How does Google defend AI Overviews despite the criticisms?

Google CEO Sundar Pichai defended AI Overviews, stating that progress has been made and that minor inaccuracies do not detract from the feature’s value.

What is the recommended approach for Google moving forward?

Google should prioritize trustworthy information, ensuring that convenience does not come at the cost of accuracy, and remain committed to combating the spread of misinformation from its own systems.