Highlights

- Project Astra aims to create AI that understands physical environments

- Google shifts focus from phone-based assistants to real-world interactions

- Gemini-powered ‘Ask Photos’ turns personal libraries into AI knowledge bases

- Practical applications include AI-assisted travel planning and task management

Google’s strategy on artificial intelligence is now shifting from the conventional phone assistant to more ambitious applications in the real world.

The tech giant envisions AI that can understand and interact with the physical environment around us.

It all began with Google Assistant, which hit its peak on the Pixel 4 in 2019.

Google conceived it as a voice-controlled system that could operate the phone and carry out complex tasks with close to zero latency.

In practice, the system had its problems.

Users had to use specific phrases, and the range of possible actions remained limited to certain apps.

While other companies, like Apple, are reportedly exploring large language models as a way to revitalize voice assistants, Google appears to want more than a smartphone alone can deliver.

The company’s latest announcements at I/O 2024 show a focus on AI that can help people in the real world.

What is Project Astra?

Project Astra is at the forefront of this new direction.

The goal of Project Astra is to develop a responsive AI that provides information and help when users point their phones (and, one day, smart glasses) at things in the real world.

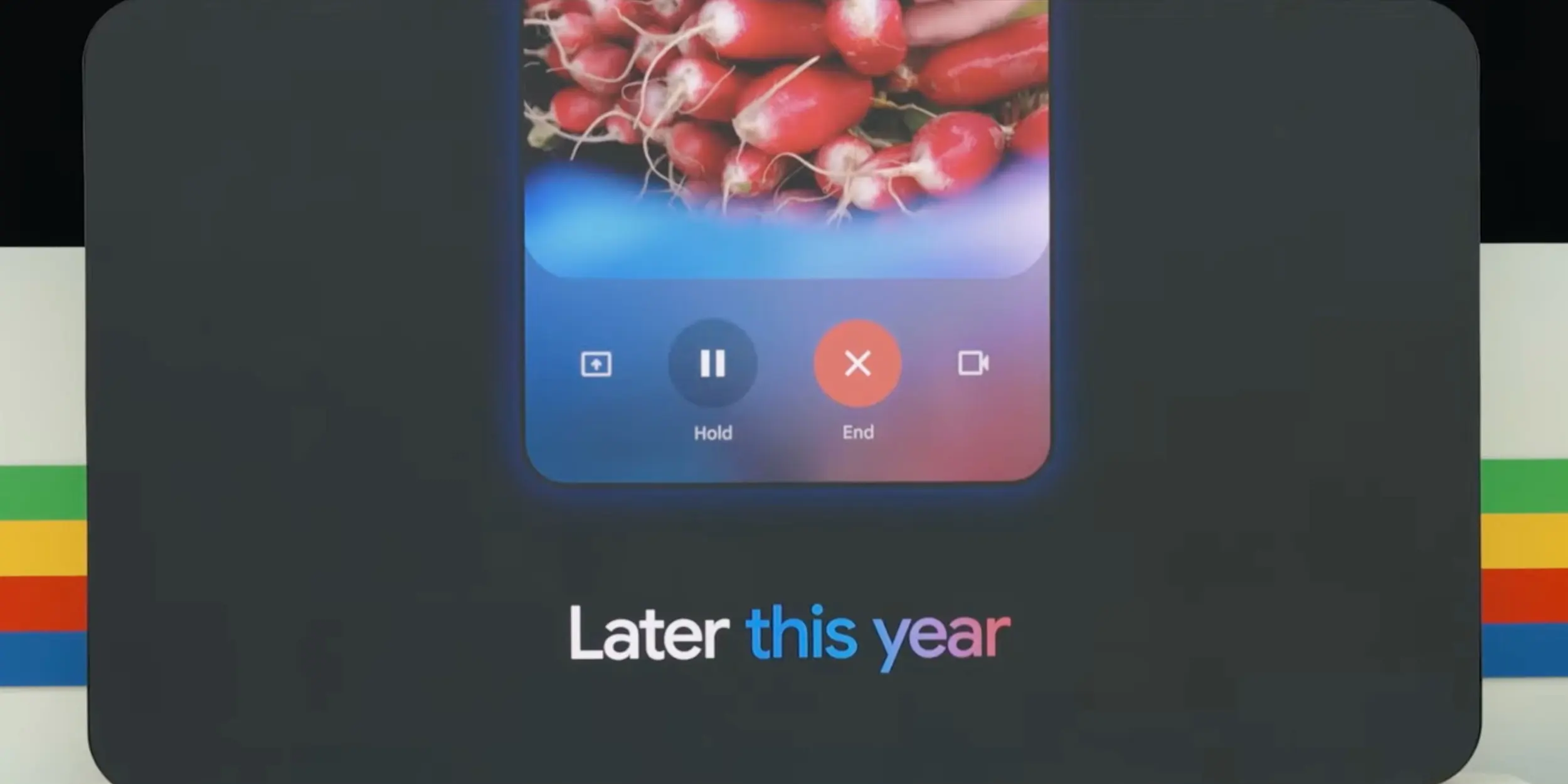

The technology will eventually be brought into the Gemini Live experience as well, adding natural two-way conversations with AI.

The voice component will launch first, with camera capabilities following shortly after.

Google is also going further, exploring ways to harness information from the photos and videos users take.

With the Gemini-powered ‘Ask Photos’ feature, personal photo libraries are intended to become a knowledge base that the AI can draw on for personalized assistance.

Practicality

Practical applications of the technology are already in development.

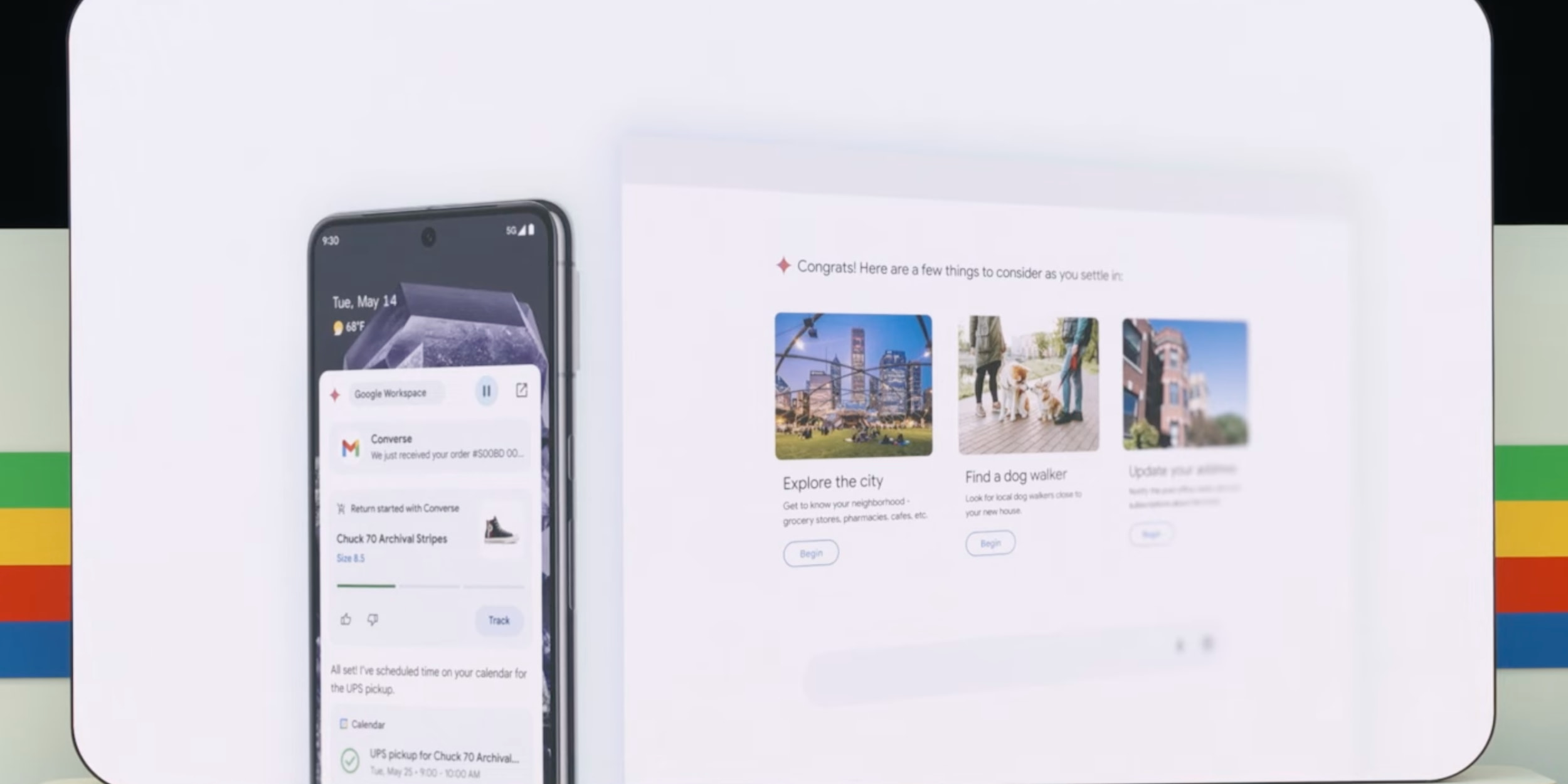

For instance, future Gemini Extensions for Google Calendar, Keep, and Tasks will let users create calendar entries or a shopping list simply by taking a picture of, say, a school syllabus or a recipe.

Gemini Advanced will include an immersive travel planner and other real-world applications, such as using AI to help return products or plan a move to a new city.

This shift towards real-world AI applications addresses a fundamental challenge that Google has faced since its inception: some questions and their contexts are difficult to express in text form.

By allowing users to provide visual input, Google aims to bridge the gap between digital queries and real-world scenarios.

FAQs

What is Project Astra?

Project Astra is Google’s initiative to develop an AI assistant that can provide information and help when users point their devices at objects in the real world.

It aims to create a more intuitive and context-aware AI that can understand and interact with the physical environment.

How does Google’s new AI approach differ from traditional phone assistants?

Unlike traditional phone assistants, which primarily operate within the device, Google’s new AI applications are designed to understand and interact with the real world.

This includes visual recognition capabilities and the ability to process complex, context-dependent queries.

What are some practical applications of Google’s real-world AI?

Practical applications include creating calendar entries or shopping lists from photos of documents, AI-assisted travel planning, and helping with tasks like product returns or planning a move to a new city.

These applications aim to bridge the gap between digital queries and real-world scenarios.

How does the Gemini-powered ‘Ask Photos’ feature work?

The ‘Ask Photos’ feature uses AI to analyze and understand the content of users’ personal photo libraries.

The AI can then use that visual information as a knowledge base for providing personalized assistance and answering queries related to the user’s experiences and surroundings.

When will these new AI features be available to users?

While specific release dates haven’t been announced for all features, Google has indicated that the Gemini Live experience will be launching in stages.

The voice component is expected to launch first, followed by camera capabilities.

Some features, like Gemini Extensions for Google apps, are in development and likely to be released in the near future.
