Highlights

- Jan Leike resigns from OpenAI over concerns about company’s core priorities

- Leike criticizes OpenAI for prioritizing product development over AI safety

- Former superalignment team leader cites under-resourcing and research struggles

- OpenAI CEO Sam Altman acknowledges need for improved AI safety measures

Jan Leike resigned last week as leader of OpenAI’s “superalignment” team, which is tasked with ensuring that AI systems behave in line with human needs and priorities. He left over concerns about the company’s core priorities.

I love my team.

I’m so grateful for the many amazing people I got to work with, both inside and outside of the superalignment team.

OpenAI has so much exceptionally smart, kind, and effective talent.

— Jan Leike (@janleike) May 17, 2024

In a post on social media last Friday, Leike said he had reached a breaking point with OpenAI over what he called the company’s “core priorities.”

He wrote, “Building smarter-than-human machines is an inherently dangerous endeavor… But over the past years, safety culture and processes have taken a backseat to shiny products.”

i’m super appreciative of @janleike’s contributions to openai’s alignment research and safety culture, and very sad to see him leave. he’s right we have a lot more to do; we are committed to doing it. i’ll have a longer post in the next couple of days.

— Sam Altman (@sama) May 17, 2024

OpenAI’s Greg Brockman and Sam Altman said in a joint statement that the company has raised awareness of AI risks and will keep improving its security work to match the stakes of each new model.

An excerpt from their joint response reads: “We are very grateful for all Jan has done for OpenAI, and we know he will continue to contribute to our mission externally. In light of some of the questions raised by his departure, we’d like to explain our thinking on our overall strategy.

First, we have increased awareness of AGI risks and opportunities so that the world is better prepared for them. We have repeatedly demonstrated the vast possibilities offered by scaling deep learning and analyzed their impact; made calls internationally for governance of AGI (before such calls became popular); and conducted groundbreaking work in the scientific field of assessing the catastrophic risks of AI systems.

Second, we are laying the foundation for the secure deployment of increasingly robust systems. Making new technology secure for the first time is not easy. For example, our team did a lot of work to safely bring GPT-4 to the world, and has since continued to improve model behavior and abuse monitoring in response to lessons learned from deployments.

Third, the future will be more difficult than the past. We need to continually improve our security efforts to match the risks of each new model. Last year we adopted a readiness framework to systematize our approach to our work…”

Quitting Over Backlash

Yesterday was my last day as head of alignment, superalignment lead, and executive @OpenAI.

— Jan Leike (@janleike) May 17, 2024

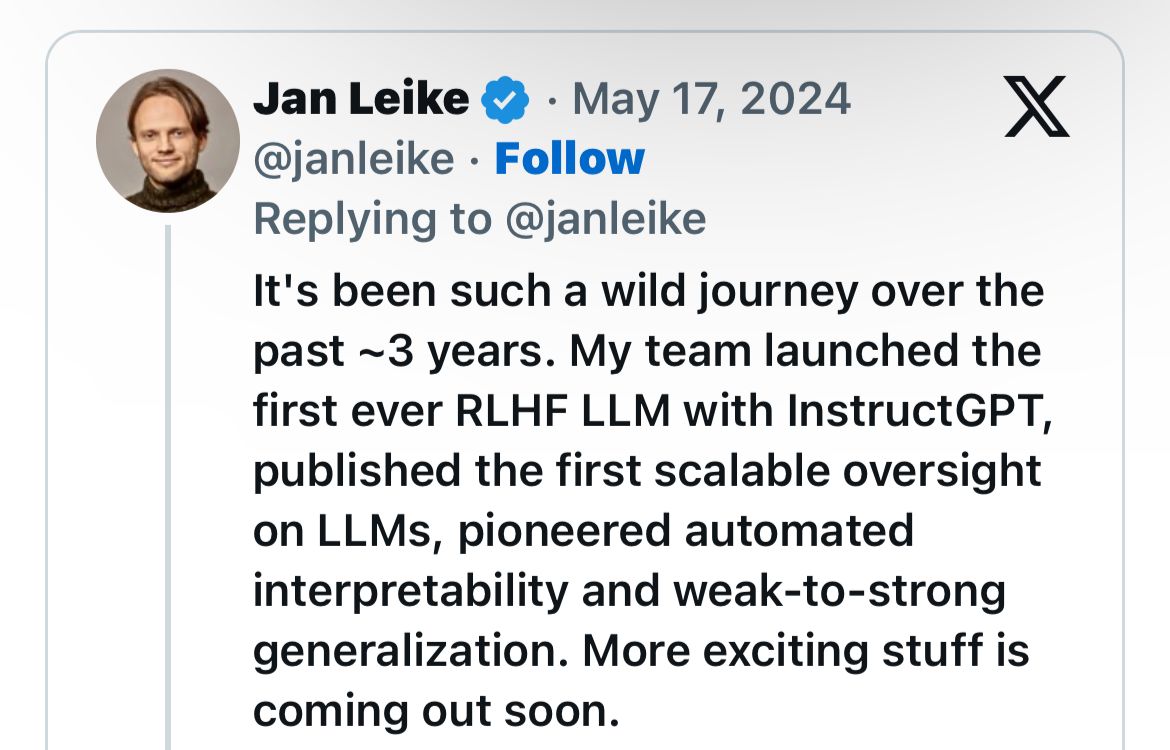

Leike, who joined OpenAI in 2021, expressed concern that his team had been under-resourced and faced difficulties in getting crucial research done.

“Sometimes we were struggling for compute, and it was getting harder and harder to get this crucial research done,” he wrote.

Highlighting the importance of AI safety, Leike said, “I believe much more of our bandwidth should be spent getting ready for the next generations of models, on security, monitoring, preparedness, safety, adversarial robustness, (super)alignment, confidentiality, societal impact, and related topics.”

OpenAI CEO Sam Altman responded that the company is committed to AI safety but acknowledged that it has more work to do.

Leike’s departure comes amid a leadership shakeup at OpenAI and shortly after the company announced that its most advanced AI model, GPT-4o, would be made available for free through ChatGPT, raising concerns over the speed of development and public access to artificial intelligence.

OpenAI has confirmed that the superalignment team is being disbanded and its members absorbed into other research teams. The question remains of how to strike the right balance between advancing AI capabilities and implementing sound safety measures.

FAQs

Why did Jan Leike resign from OpenAI?

Jan Leike resigned due to concerns over OpenAI’s core priorities, stating that the company prioritized product development over AI safety.

What were Jan Leike’s main concerns about OpenAI?

Leike was concerned that the safety culture and processes had taken a backseat to product development, and that his team was under-resourced and struggling to conduct crucial research.

What did Jan Leike highlight as important for AI safety?

Leike emphasized the need for increased focus on security, monitoring, preparedness, safety, adversarial robustness, superalignment, confidentiality, societal impact, and related topics.

How did OpenAI CEO Sam Altman respond to Leike’s resignation?

Sam Altman said the company is committed to AI safety but admitted that it has more work to do on its safety measures.
